By Otto Spijkers

Instead of talking about animal rights in response to co-blogger Richard Norman's article, I would like to write about robot rights. Next week, a group of experts will come together in Rome to discuss Roboethics (for an overview of some of their ideas, see here). Here is a starter for the robot rights debate.

Later this year, a group of South Korean scientists will come up with a "Robot Ethics Charter."

The ‘Robot Ethics Charter’, which will be unveiled later this year, will insist that humans should not exploit robots and should use them responsibly. It is expected to be a version of the classic three laws of robotics developed by the science fiction author Isaac Asimov. These are that robots must not harm people, and that they must obey orders and protect their own existence unless either conflicts with the first law.

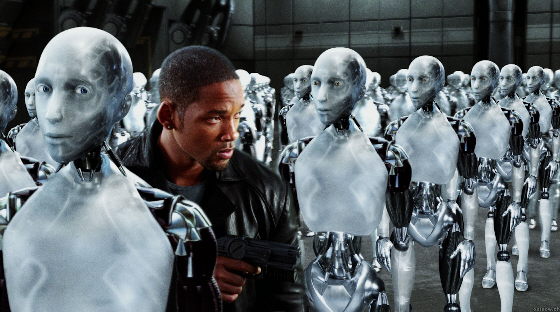

As the movie "I, Robot" showed, Isaac Asimov's three laws, like any law, can be interpreted in ways that lead to unacceptable outcomes. Robots may not have a conscience, but they can learn, connect one law with another, and reach conclusions independently. In the end, interpreting the law is all about using common sense and finding a way to read the rules in accordance with the principles they aim to protect. But can robots do this? If they do not have common sense, then perhaps it is wise to add a few more rules?

Instead of only imposing duties on robots, perhaps we should give them some rights too? Although I am not an expert, I suspect the story of robot rights is similar to that of animal rights. Human beings, the powerful in both cases, must decide for themselves whether they wish to answer to robots or animals, the powerless, for the effects they have on them, particularly the negative ones. Neither robots nor animals can force human beings to do so. Human beings can choose to do so to preserve their own dignity, to respect the dignity of the robot or animal, or both. Whether robots or animals have 'natural rights' or whether these rights are created by human beings is not relevant; what is relevant is that the powerful (human beings) acknowledge and respect these rights. Of course, what is missing is an accountability mechanism through which robots or animals can actually ensure respect for their rights and change the behavior of human beings. Animals have never been successful in this respect, but according to the BBC, a UK government study predicted that within the next 50 years robots could demand the same rights as human beings. The BBC is probably referring to the following paper: "Utopian dream or rise of the machines?"

– Otto

Dear Sarah,

No, I am not a robot. I defend robot rights not because I believe humans and robots and animals are all equal (whatever that means), but mainly because mistreating robots would be an assault on human dignity.

Otto

Are you a robot?

I'm never really sure what people mean when they say "soul", but I'm pretty sure a computer does not have one. You raise an interesting point about intercourse with a robot, but as I see it, that sounds like little more than an improved blow-up doll. However, we should be concerned about the future of prostitution, and maybe think about retraining programs for prostitutes around the world. Maybe they can get call center jobs!

Do you think that the existence of a central nervous system is the key issue here? What about “a soul”? In my view, the key question is indeed the one you raised at the end of your comment: What the hell does exploiting a robot even mean? I think it is more related to human dignity than robot dignity. For example, the (future) possibility of a human having intercourse with a robot may raise issues of human dignity rather than robot dignity.

Are you really serious, Otto?

I am reminded of the GM superbowl commercial:

http://www.youtube.com/watch?v=B3NGN4t4hm4

There was some protest at this commercial, given the obvious connection of robotization of car factories and unemployment amongst human workers (and the connection this has to depression, suicide, etc.)

Only the Koreans would come up with something this ridiculous. At least animals have a central nervous system. The Canadian philosopher and social activist John McMurtry has an interesting theory about the Life Economy, in which we can make moral and ethical judgments about humans and animals on the basis of how much they feel: the more developed an animal's central nervous system (as measured by brain size, length of nerves, and other scientific criteria), the more respect we must accord it. On this basis, robots get 0.

What the hell does exploiting a robot even mean?